Before We Get Started

This article series covers implementing a custom cache system for Statamic that provides near-static response times while preserving some of the text-editor workflows that make Statamic great. The cache system we will build together will not suit every project, and it takes more legwork and time to get running smoothly than Statamic's built-in options.

If you're looking for something simpler, Statamic already ships with fantastic caching features, including static caching, the cache tag, and the static site generator.

Throughout this article series, we will explore creating a custom cache system for Statamic. Statamic already provides a wide range of caching systems and utilities, from static HTML file caching and half-measure caching to specialized caching tags and a full-fledged static site generator. The availability of all these options raises the question: why develop another caching system?

The short answer is that sometimes it's fun to experiment with building a system on your own; the longer answer will be the remainder of this article.

The raw performance of static sites, whether produced by Statamic's static HTML cache or by the static site generator, is very attractive. However, the static site generator relies on additional deployment workflows, and the static HTML cache depends on events triggered by the Control Panel for invalidation. While the latter is not a deal breaker for most users, I enjoy working with Statamic from my text editor for most day-to-day tasks.

Historically, to use the text editor for most of my content management, I've had to rely on Statamic's half-measure caching strategy and the Stache watcher to pick up changes at the file-system level. This works just fine for most sites, but the performance still pales compared to a statically generated site or the static HTML cache. To be very clear about the goals of this article: it is not about how slow Statamic is (it isn't), nor is it a dig at Statamic's caching implementations (they work great). It is more a documentation of my thought process in making something work the way I want it to. In that sense, this journey shows how flexible Statamic can be.

# What We're Going to Build

The goal of our custom caching system will be to get as close to the performance of the static HTML cache as we reasonably can without having to rely on Control Panel events for most cache invalidation, as well as support a few additional cache invalidation scenarios. Our cache should be able to invalidate itself under the following conditions automatically without requiring the Control Panel:

- The entry was updated or removed;

- Other content that appears within the final response was edited or removed;

- Any template or partial used to generate the final response was updated or removed.

Some types of cache invalidation will still require either manual invalidation or Control Panel events. Examples include creating new collection entries or entirely new collections. I still consider the design a success despite these limitations, since those scenarios are relatively rare in my workflows.
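One way to detect the conditions listed above without the Control Panel is to record which files a response depended on, along with their modification times, and compare them on later requests. The following is only a sketch of that idea under my own assumptions, not the implementation this series arrives at; `snapshotDependencies` and `isStale` are hypothetical helper names.

```php
<?php

/**
 * Records the modification time of every file a response depended on.
 * Hypothetical helper: the paths would be entries, templates, and partials.
 *
 * @param  string[]  $paths
 * @return array<string, int>
 */
function snapshotDependencies(array $paths): array
{
    $snapshot = [];

    foreach ($paths as $path) {
        clearstatcache(true, $path);
        // Missing files are recorded as 0 so they always count as changed.
        $snapshot[$path] = file_exists($path) ? filemtime($path) : 0;
    }

    return $snapshot;
}

/**
 * A cached response is stale if any dependency was removed or modified.
 *
 * @param  array<string, int>  $snapshot
 */
function isStale(array $snapshot): bool
{
    foreach ($snapshot as $path => $recordedTime) {
        clearstatcache(true, $path);

        if (! file_exists($path) || filemtime($path) !== $recordedTime) {
            return true;
        }
    }

    return false;
}
```

Because the check only touches the file system, it does not need Laravel or Statamic to be loaded.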

In addition to our custom flat-file invalidation logic, we will also be adding a large number of helpful features to our custom cache system:

- Manual Cache Labeling: We can label all our cached responses, allowing us to invalidate entire content categories quickly and efficiently. For example, we could conditionally label specific parts of our template and purge only cached content containing those labels.

- Automatic Cache Labeling: Besides manual cache labeling, our cache system will automatically apply labels under many scenarios. An example here is that all pages that utilize Statamic's collection tag to generate a listing would automatically receive a unique label, letting our cache system invalidate that page if an entry within those collections is updated, removed, or created.

- Cache Bypass Detection: We can conditionally perform actions in our templates based on whether or not the caching system is actively being bypassed.

- Safe and Instant Invalidation: Our cache system's invalidation logic will not rely on our cache files being deleted, helping to prevent several edge cases that can arise on busy websites. This design choice will also help us to invalidate every cached response instantly (we will get into this later).
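To make the "instant invalidation without deleting files" idea more concrete, here is one possible approach, sketched under my own assumptions: a single marker file stores the time of the last global invalidation, and a cached file is only considered usable if it was written after that time. The marker file name and both function names are hypothetical, and the design we build later in the series may differ.

```php
<?php

/**
 * Marks every cached response as invalid by recording a timestamp in a
 * marker file. No cache files are deleted. "invalidated-at" is a
 * hypothetical file name chosen for this sketch.
 */
function invalidateAll(string $cacheDir, ?int $now = null): void
{
    file_put_contents($cacheDir.'/invalidated-at', (string) ($now ?? time()), LOCK_EX);
}

/**
 * A cached file is usable only if it was written after the last invalidation.
 */
function isUsable(string $cacheFile, string $cacheDir): bool
{
    $marker = $cacheDir.'/invalidated-at';
    $invalidatedAt = is_file($marker) ? (int) file_get_contents($marker) : 0;

    clearstatcache(true, $cacheFile);

    return is_file($cacheFile) && filemtime($cacheFile) > $invalidatedAt;
}
```

Writing one small file invalidates everything at once, and stale files are simply overwritten the next time their page is requested. Note that modification times have one-second resolution here, so a file written in the same second as an invalidation is treated as stale.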

We will also be imposing a few limitations on our cache implementation to simplify the development of the cache system and make it easier to maintain our site in the long term. These limitations may make our custom caching system unsuitable for some Statamic projects, but that is how it goes with bespoke designs.

The first limitation we will impose is that our cache system will ignore any request that includes any URL parameters. This design choice solves two problems:

- We don't have to worry about complicated cache invalidation logic related to URL parameters;

- We no longer have to worry about someone spamming our site with thousands of bogus requests with different URL parameters and filling up our disk space. These requests will bypass the cache system and consume resources, but at that point, we could look into other ways to limit resource consumption, such as rate limiting.
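A check for this first limitation can be very small. The sketch below assumes the request URI is read from an array shaped like PHP's `$_SERVER` superglobal; `hasUrlParameters` is a hypothetical name, not something from Statamic or Laravel.

```php
<?php

/**
 * Returns true when the request URI carries a query string.
 * A bare trailing "?" also counts, which errs on the side of
 * bypassing the cache.
 *
 * @param  array<string, mixed>  $server  Typically $_SERVER.
 */
function hasUrlParameters(array $server): bool
{
    return str_contains((string) ($server['REQUEST_URI'] ?? ''), '?');
}
```

In `public/index.php` this could be called as `hasUrlParameters($_SERVER)` before any attempt to read from the cache. It relies on `str_contains`, so it needs PHP 8 or later.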

The second limitation is that if the markup of a page includes Laravel's cross-site request forgery (CSRF) token, that page will not be cached. Throughout the years, one of the most common pain points surrounding the caching of content generated by a system utilizing Laravel has been how to deal with these tokens.

Our cache system will automatically exclude content that includes the token. However, if you do the work to supply the token through alternative means, such as populating it with JavaScript from an API endpoint, the page will be cached, which helps improve initial response times.
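The token check itself can be a plain string search over the rendered markup. This is a minimal sketch, assuming the current token is passed in by the caller (inside Laravel it could come from the `csrf_token()` helper); `containsCsrfToken` is a hypothetical name.

```php
<?php

/**
 * Reports whether rendered markup contains the current CSRF token.
 * Pages for which this returns true should not be written to the cache.
 */
function containsCsrfToken(string $html, ?string $token): bool
{
    // Without a token there is nothing that could leak into the cache.
    if ($token === null || $token === '') {
        return false;
    }

    return str_contains($html, $token);
}
```

Searching for the token value, rather than for a specific form field, also catches tokens placed in meta tags or inline scripts.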

The third limitation we will impose is that the cache system will automatically disable itself if a user is authenticated; this will allow us to serve content blazingly fast to non-authenticated users, which works great for content-heavy sites without user interaction. For other sites that provide user sections or have heavy administrator interaction, this setup helps to simplify a few things:

- Bypassing the cache within our sites for authenticated users becomes much simpler since it is handled directly by the caching system.

- We do not have to worry about static caching interfering with Control Panel features like the Live Preview feature.

While our custom cache system will disable itself for authenticated users, Statamic's other caching systems, such as the cache tag, will still be available to us should we need them.

The fourth and final limitation we will impose on our design is that it will only work with the flat-file content storage driver. One of Statamic's strongest selling points is its ability to work without a database. Our cache system will only interact with certain features available with its flat-file storage drivers. This limitation is likely the largest deal breaker for projects that want to use the Eloquent driver to store their entries.

# Experimenting with Response Times

Before implementing our cache system, we will need time to understand what we are in for. We will experiment with some general response times of a new Statamic website. Our testing and benchmarking will not be a highly involved or rigid process since, right now, we are only concerned with relative response times to get a sense of where we can implement our cache system.

I like using Statamic's Cool Writings Starter Kit whenever I need a quick site to experiment with or validate an idea. If you want to follow along, I created my local site by running the following command:

```shell
statamic new cache_dev statamic/starter-kit-cool-writings
```

My local Windows/WSL2 environment is not especially well optimized, but that actually helps when hunting for bottlenecks. It can be challenging to spot slow code if the environment and hardware execute even the worst code incredibly fast.

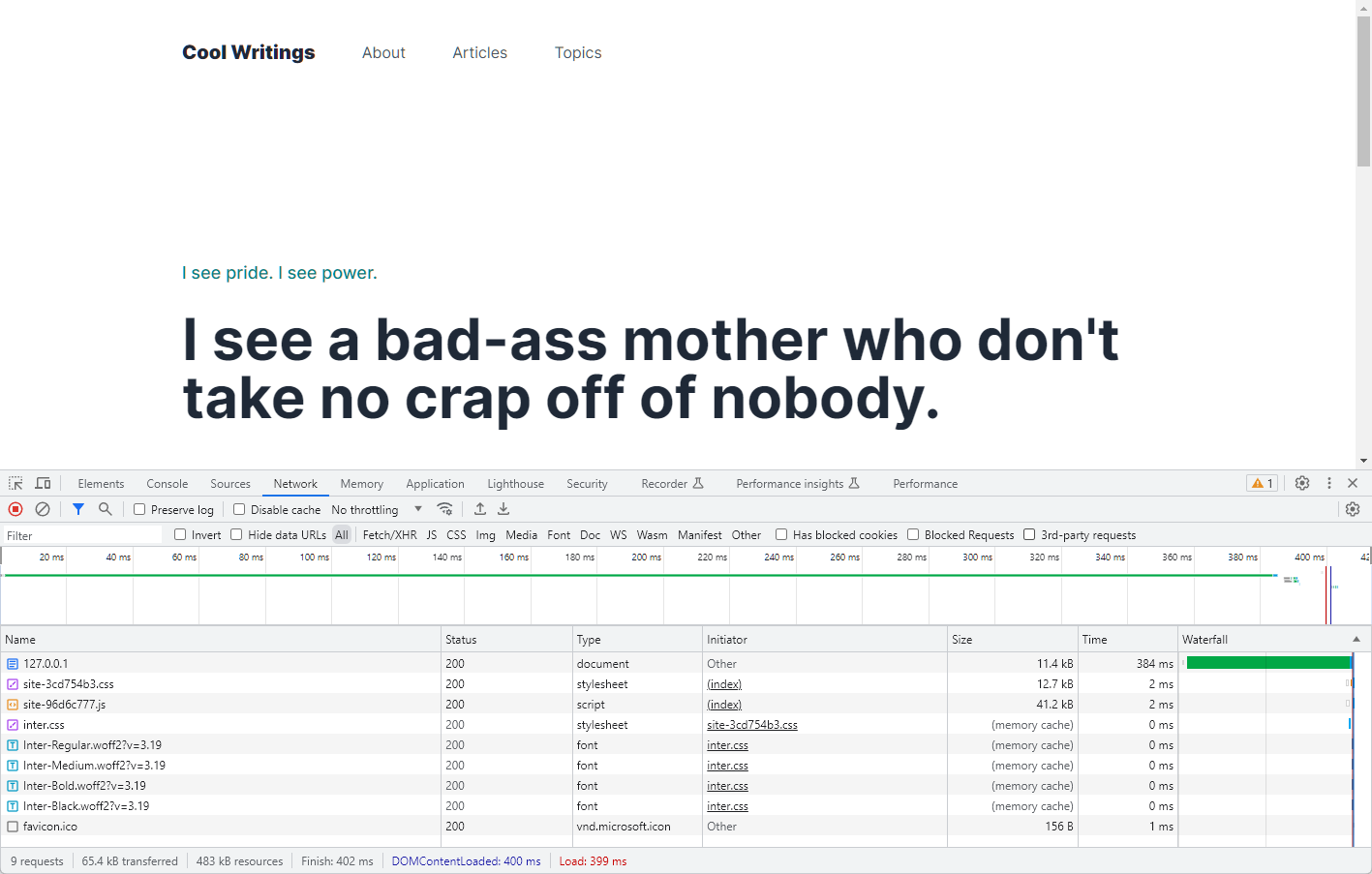

Starting the site and refreshing a few times, the response time seems to settle at around 380 milliseconds (again, this process is just to observe some relative numbers):

That's not terrible, but let's see what happens if we wrap the entirety of the home.antlers.html template inside a cache tag pair:

```antlers
{{ cache }}
<div class="max-w-5xl mx-auto relative py-16 lg:pt-40 lg:pb-32">
    <article class="content">
        <p class="font-medium text-lg text-teal">{{ subtitle }}</p>
        <h1>{{ content }}</h1>
    </article>
</div>

<div class="max-w-2xl mx-auto mb-32">
    {{ collection:articles limit="5" as="articles" }}
        {{ if no_results }}
            <h2 class="text-3xl italic tracking-tight">Feel the rhythm! Feel the rhyme! Get on up, it's writing time! Cool writings!</h2>
        {{ /if }}

        {{ articles }}
            <div class="mb-16">
                <h2 class="mb-4">
                    <a href="{{ url }}" class="text-2xl hover:text-teal tracking-tight leading-tight font-bold">
                        {{ title | widont }}
                    </a>
                </h2>
                <p class="text-gray-600">
                    <span class="text-gray-800 text-sm uppercase tracking-widest font-medium">{{ date }}</span> —
                    {{ excerpt | widont ?? content | strip_tags | safe_truncate(150, '...') }}
                </p>
            </div>
        {{ /articles }}
    {{ /collection:articles }}
</div>
{{ /cache }}
```

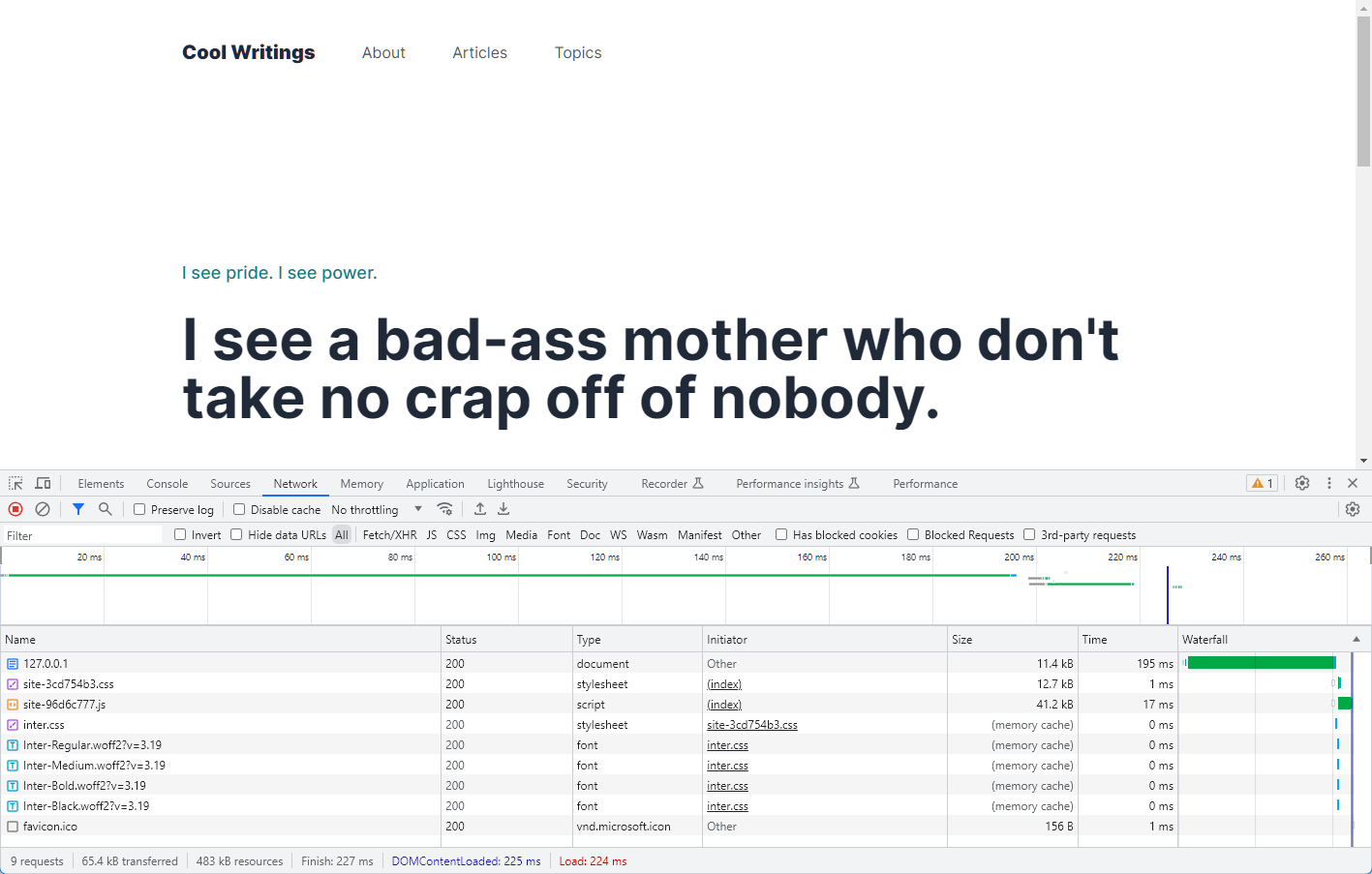

Rerunning our site produces some peculiar results. Sometimes, the response times jump back up to around 380 milliseconds, but I'm typically seeing response times around 190 milliseconds:

Overall, that is an improvement, but we still need to get closer to the speeds of the static cache.

For our next experiment, we will disable the Stache watcher, enable half-measure static caching, and see what happens. Inside the .env environment file, let us set the following values:

```env
STATAMIC_STACHE_WATCHER=false
STATAMIC_STATIC_CACHING_STRATEGY=half
```

Clearing our site's cache and refreshing the page a few times yields much better response times:

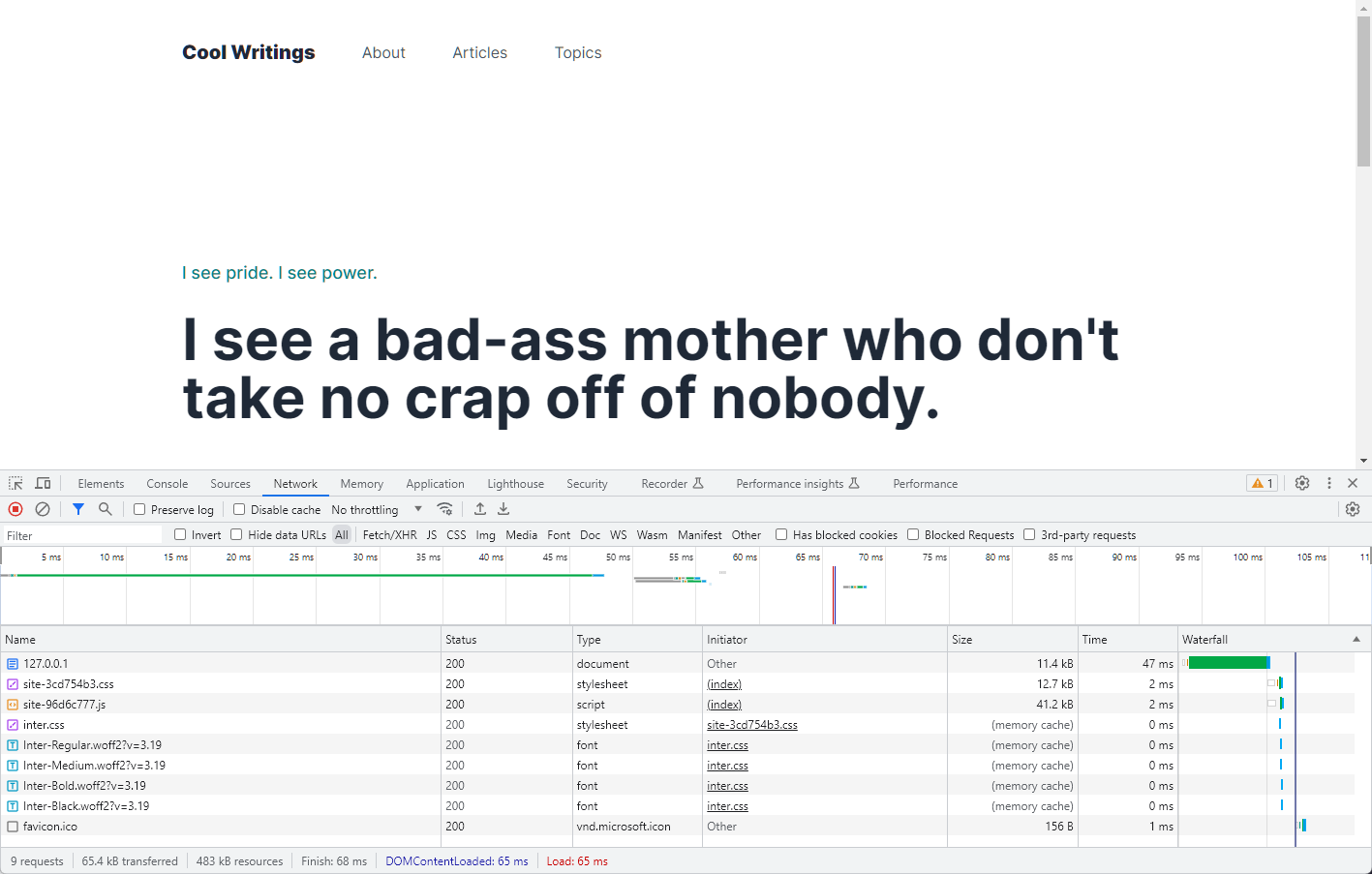

On average, the response time has dropped to around 50 milliseconds. That is quite an improvement! However, if we were to update the content from our text editor, we would have to clear our site's cache for the live site to reflect the changes. While this isn't the worst thing in the world, let us see if we can develop a way to drop the response times even more and avoid having to manually clear the cache.

Following the documentation and general guidance, our next steps to improve site performance would be to look into the static HTML cache driver or the static site generator. However, the point of our journey is to see what we can do without those; it is at this point we need to start looking for performance savings in unconventional places.

When I started working on this cache experiment about eight months ago, I was curious about the response times if I short-circuited Laravel and Statamic entirely. To do this, we need to look at where everything starts when serving our web requests, the public/index.php file.

The first thing I did was to test the overhead of Composer itself; to do this, I added the following to my public/index.php file:

```php
<?php

use Illuminate\Contracts\Http\Kernel;
use Illuminate\Http\Request;

define('LARAVEL_START', microtime(true));

/*
|--------------------------------------------------------------------------
| Check If The Application Is Under Maintenance
|--------------------------------------------------------------------------
|
| If the application is in maintenance / demo mode via the "down" command
| we will load this file so that any pre-rendered content can be shown
| instead of starting the framework, which could cause an exception.
|
*/

if (file_exists($maintenance = __DIR__.'/../storage/framework/maintenance.php')) {
    require $maintenance;
}

/*
|--------------------------------------------------------------------------
| Register The Auto Loader
|--------------------------------------------------------------------------
|
| Composer provides a convenient, automatically generated class loader for
| this application. We just need to utilize it! We'll simply require it
| into the script here so we don't need to manually load our classes.
|
*/

require __DIR__.'/../vendor/autoload.php';

// Stop here so that only Composer's autoloader has been loaded.
echo 'Hello, world!';
return;

// ...
```

After refreshing the page a few times, the response time bounced between 8 and 40 milliseconds. I found it interesting that the numbers varied so much, so I then moved the echo and return statements above the line that requires Composer's autoloader, exiting before Composer was ever included.

The response time was consistently between 1 and 2 milliseconds on my local development machine after this change. We can't compare this to our earlier response times since our changes are not doing anything beneficial. However, these tests show that there are opportunities for significant performance gains if we can bypass Laravel and Statamic entirely. There are some severe consequences of short-circuiting both of these systems, however.

To start, we will no longer be able to rely on any of Laravel's features or Statamic's. Our cache layer will need to be able to operate independently of them. Additionally, exiting the public/index.php file before Composer is available also means that we cannot easily rely on any third-party dependencies.

Another challenge for future us with this setup is that our cache system will also need a way to invalidate itself without asking Statamic for help.
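The numbers in this section came from watching response times in the browser, which also includes web server overhead. If you would rather take rough measurements from inside PHP, a small helper along these lines can work. It is only a sketch; `medianMilliseconds` is a hypothetical name, and the workload shown is a placeholder.

```php
<?php

/**
 * Runs $work several times and returns the median duration in milliseconds.
 */
function medianMilliseconds(callable $work, int $runs = 5): float
{
    $durations = [];

    for ($i = 0; $i < max(1, $runs); $i++) {
        $start = hrtime(true); // Nanoseconds from a monotonic clock.
        $work();
        $durations[] = (hrtime(true) - $start) / 1_000_000;
    }

    sort($durations);

    return $durations[intdiv(count($durations), 2)];
}

// Placeholder workload. To approximate Composer's overhead, time a
// `require` of vendor/autoload.php in a fresh PHP process instead.
$elapsed = medianMilliseconds(fn () => str_repeat('x', 1000));

printf("median: %.3f ms\n", $elapsed);
```

Using the median rather than the mean keeps a single slow run from skewing the result, which matters when the numbers bounce around as much as they did above.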

# Experimentation and Cache Design

In the last section, we explored the relative response times of different Statamic caching strategies and experimented with the response times of short-circuiting both Laravel and Statamic. We also discovered that the response times resulting from exiting public/index.php before Laravel and Statamic are loaded are incredibly speedy.

Our main challenge will be implementing a cache system that can operate independently of Laravel, Statamic, and any other third-party dependencies. This could feel intimidating, particularly if you are used to always working within the context of a framework, but sometimes it's nice to do "vanilla" PHP development.

To start the actual development of our cache system, we will begin by creating the scaffolding for our cache manager and updating our public/index.php file to include the changes required to interact with our cache.

The first step will be to create a file at app/HybridCache/Manager.php.

In app/HybridCache/Manager.php:

```php
<?php

namespace App\HybridCache;

class Manager
{
    public function canHandle(): bool
    {
        return true;
    }

    public function sendCachedResponse(): void
    {

    }
}
```

I am choosing to name this cache system "Hybrid Cache" since it sits somewhere between Statamic's static and half-cache methods in how it behaves and operates. With our hybrid cache manager class scaffolded, we can update the public/index.php to include the following changes after the check for Laravel's maintenance page:

In public/index.php:

```php
<?php

use Illuminate\Contracts\Http\Kernel;
use Illuminate\Http\Request;

define('LARAVEL_START', microtime(true));

/*
|--------------------------------------------------------------------------
| Check If The Application Is Under Maintenance
|--------------------------------------------------------------------------
|
| If the application is in maintenance / demo mode via the "down" command
| we will load this file so that any pre-rendered content can be shown
| instead of starting the framework, which could cause an exception.
|
*/

if (file_exists($maintenance = __DIR__.'/../storage/framework/maintenance.php')) {
    require $maintenance;
}

// Load the cache manager by hand; Composer's autoloader is not available yet.
// Anchoring the path to __DIR__ keeps it independent of the working directory.
require __DIR__.'/../app/HybridCache/Manager.php';

$cacheManager = new \App\HybridCache\Manager();

if ($cacheManager->canHandle()) {
    $cacheManager->sendCachedResponse();
}

// ... the rest of the file is unchanged.
```

Our canHandle method will be responsible for determining whether the cache system can handle the current request. Examples of requests the cache system should not handle include visits to the Statamic Control Panel and requests for pages that have not been cached yet. The sendCachedResponse method will be responsible for serving content from the cache; we will fill in both methods later.
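To give a feel for where canHandle is headed, here is a rough sketch of the kind of checks it might perform using nothing but PHP's `$_SERVER` data. It assumes the Control Panel lives under Statamic's default `cp` route, and `canHandleRequest` is a hypothetical standalone function rather than the final method.

```php
<?php

/**
 * Sketch of request filtering that works without Laravel or Statamic.
 *
 * @param  array<string, mixed>  $server   Typically $_SERVER.
 * @param  string                $cpRoute  Control Panel route (assumed default: "cp").
 */
function canHandleRequest(array $server, string $cpRoute = 'cp'): bool
{
    // Only simple GET requests are candidates for a cached response.
    if (($server['REQUEST_METHOD'] ?? 'GET') !== 'GET') {
        return false;
    }

    $rawPath = parse_url((string) ($server['REQUEST_URI'] ?? '/'), PHP_URL_PATH);
    $path = '/'.trim(is_string($rawPath) ? $rawPath : '', '/');
    $cpPrefix = '/'.trim($cpRoute, '/');

    // Never serve Control Panel pages from the cache.
    return $path !== $cpPrefix && ! str_starts_with($path, $cpPrefix.'/');
}
```

Matching on `/cp` and `/cp/` separately avoids excluding unrelated pages whose URLs merely start with the same letters, such as `/cpanel-news`.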

Notice that we are using PHP's require statement with a hard-coded path; if we were to move our cache implementation, we would need to update this line. We do it this way to avoid loading Composer when the cache can handle the current request. If you want to implement something similar in your projects, adjust this logic to suit your needs.

The next thing we will do is generate a simple cached response for all pages. We will work on ignoring specific requests and cache invalidation later.

We need a place to store our cached responses, and Laravel's storage directory seems like a great place to do that. Whenever I need to interact with a filesystem directory, I like to ensure the directory exists, typically within a service provider. Because our cache will have quite a few moving pieces later on, we will create a service provider just for the hybrid cache system at app/HybridCache/Providers/HybridCacheServiceProvider.php:

In app/HybridCache/Providers/HybridCacheServiceProvider.php:

```php
<?php

namespace App\HybridCache\Providers;

use Illuminate\Support\ServiceProvider;

class HybridCacheServiceProvider extends ServiceProvider
{
    public function boot()
    {
        $cacheStoragePath = storage_path('hybrid-cache');

        // Make sure the cache directory exists before anything writes to it.
        if (! file_exists($cacheStoragePath)) {
            mkdir($cacheStoragePath, 0755, true);
        }
    }
}
```

For our service provider to be invoked, we will also need to update our config/app.php configuration file and add our new provider to the providers array:

```php
<?php

use Illuminate\Support\ServiceProvider;

return [

    // ...

    'providers' => ServiceProvider::defaultProviders()->merge([
        /*
         * Package Service Providers...
         */

        /*
         * Application Service Providers...
         */
        App\HybridCache\Providers\HybridCacheServiceProvider::class, // Added.

        App\Providers\AppServiceProvider::class,
        App\Providers\AuthServiceProvider::class,
        // App\Providers\BroadcastServiceProvider::class,
        App\Providers\EventServiceProvider::class,
        App\Providers\RouteServiceProvider::class,
    ])->toArray(),

    // ...

];
```

With our service provider created and registered, if we visit our website now, we should see a new directory made for us at storage/hybrid-cache.
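On a busy site, two requests can reach the directory check at the same moment, and the slower one will see its `mkdir` call fail because the directory already exists. If that concerns you, a more defensive variant could look like the sketch below; `ensureDirectoryExists` is a hypothetical helper, not part of Laravel.

```php
<?php

/**
 * Creates $path if needed and tolerates another request creating it first.
 */
function ensureDirectoryExists(string $path): void
{
    if (is_dir($path)) {
        return;
    }

    // mkdir() can fail when a concurrent request wins the race, so re-check
    // with is_dir() before treating the failure as an error.
    if (! @mkdir($path, 0755, true) && ! is_dir($path)) {
        throw new RuntimeException("Unable to create directory: {$path}");
    }
}
```

Inside the service provider's boot method this would be called as `ensureDirectoryExists(storage_path('hybrid-cache'))`.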

To continue our testing and idea validation, we next want to cache all responses and serve them from our custom hybrid cache manager. We will not focus on filtering requests or invalidation at this point; we are only interested in generating the cache file and successfully reading from it without Laravel or Statamic loaded.

To create a cache file for our responses, we must intercept each request before sending it to the client. We can do this using Laravel's ResponsePrepared event, which is fired after a response has been created but before it is sent to the client.

While Laravel provides an event service provider within the App\Providers namespace, we will create a dedicated service provider to manage our events for the hybrid cache system to help keep things organized, as we will end up having many event listeners by the time we implement the cache.

Let's create the following listener implementation within app/HybridCache/Listeners/ResponsePreparedListener.php:

In app/HybridCache/Listeners/ResponsePreparedListener.php:

```php
<?php

namespace App\HybridCache\Listeners;

use Illuminate\Routing\Events\ResponsePrepared;

class ResponsePreparedListener
{
    public function handle(ResponsePrepared $event)
    {
        $content = $event->response->getContent();
        $cachePath = storage_path('hybrid-cache/test.txt');

        // For now, every response overwrites the same test file.
        file_put_contents($cachePath, $content);
    }
}
```

Laravel's ResponsePrepared event exposes the HTTP response through its public $response property. We can call the getContent method to get the response's string contents, which we store within our hybrid-cache directory.
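A plain `file_put_contents` call is fine for this experiment, but a reader could catch the file half-written if a request arrives mid-write. One common way around that, sketched here as an assumption rather than as what the series does later, is to write to a temporary file and rename it into place; `writeCacheFile` is a hypothetical helper.

```php
<?php

/**
 * Writes $content to $path via a temporary file and rename().
 * On POSIX systems, rename() within one filesystem replaces the target
 * atomically, so readers see either the old file or the new one.
 */
function writeCacheFile(string $path, string $content): bool
{
    $temporaryPath = $path.'.'.bin2hex(random_bytes(6)).'.tmp';

    if (file_put_contents($temporaryPath, $content) === false) {
        return false;
    }

    if (! rename($temporaryPath, $path)) {
        // Clean up the temporary file if it could not be moved into place.
        @unlink($temporaryPath);

        return false;
    }

    return true;
}
```

The temporary file lives next to the target so that both are on the same filesystem, which is what makes the rename a single step.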

Now that we have our event listener implementation, we can create our event service provider at app/HybridCache/Providers/HybridCacheEventServiceProvider.php:

In app/HybridCache/Providers/HybridCacheEventServiceProvider.php:

```php
<?php

namespace App\HybridCache\Providers;

use App\HybridCache\Listeners\ResponsePreparedListener;
use Illuminate\Foundation\Support\Providers\EventServiceProvider;
use Illuminate\Routing\Events\ResponsePrepared;

class HybridCacheEventServiceProvider extends EventServiceProvider
{
    /**
     * The event to listener mappings for the application.
     *
     * @var array<class-string, array<int, class-string>>
     */
    protected $listen = [
        ResponsePrepared::class => [
            ResponsePreparedListener::class,
        ],
    ];
}
```

Like the other service provider we created, we must update our config/app.php file to include our new service provider:

In config/app.php:

```php
<?php

use Illuminate\Support\ServiceProvider;

return [

    // ...

    'providers' => ServiceProvider::defaultProviders()->merge([
        /*
         * Package Service Providers...
         */

        /*
         * Application Service Providers...
         */
        App\HybridCache\Providers\HybridCacheServiceProvider::class,
        App\HybridCache\Providers\HybridCacheEventServiceProvider::class, // Added.

        App\Providers\AppServiceProvider::class,
        App\Providers\AuthServiceProvider::class,
        // App\Providers\BroadcastServiceProvider::class,
        App\Providers\EventServiceProvider::class,
        App\Providers\RouteServiceProvider::class,
    ])->toArray(),

    // ...

];
```

If we were to visit any page on our site now, we should see a new file created within our storage/hybrid-cache directory containing the content of the last page we visited. This is pretty cool in its own way but is essentially useless in the grand scheme of things. Let's update our App\HybridCache\Manager implementation to make things more interesting by returning the contents of our cache file.

In app/HybridCache/Manager.php:

```php
<?php

namespace App\HybridCache;

class Manager
{
    public function canHandle(): bool
    {
        return true;
    }

    public function sendCachedResponse(): void
    {
        // storage_path() is unavailable here, so build the path by hand.
        $cachePath = __DIR__.'/../../storage/hybrid-cache/test.txt';

        if (! file_exists($cachePath)) {
            return;
        }

        echo file_get_contents($cachePath);
        exit;
    }
}
```

A crucial thing we need to remember when implementing any method that will be used within our public/index.php file is that Composer, Laravel, and Statamic will not be available to us. For this reason, we are manually constructing the relative path to our hybrid-cache directory instead of utilizing Laravel's storage_path helper function.

Visiting any page on our site should now display the same content, regardless of the URL. While every request now returns the same content, it does so quickly. We now have the basic setup for our custom cache implementation. Still, we must start working through the more intricate details, like excluding pages from the cache and cache invalidation.
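An obvious next step is to give each URL its own cache file instead of sharing test.txt. As a preview of how that mapping could work without any framework help, here is a small sketch; the `cachePathFor` name, the sha1 hashing, and the `.html` suffix are all my own illustrative assumptions.

```php
<?php

/**
 * Maps a request URI to a cache file inside $cacheDir.
 * Hashing the path sidesteps slashes and unusual characters in file names.
 */
function cachePathFor(string $requestUri, string $cacheDir): string
{
    $path = parse_url($requestUri, PHP_URL_PATH);

    // Normalize so that "/about" and "/about/" share one cache file.
    $normalized = '/'.trim(is_string($path) ? $path : '', '/');

    return rtrim($cacheDir, '/').'/'.sha1($normalized).'.html';
}
```

Inside the manager, the directory argument would be the same hand-built path we used above, for example `cachePathFor($_SERVER['REQUEST_URI'], __DIR__.'/../../storage/hybrid-cache')`.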

# Organization, Singletons, and Facades

Before going much further, we will take some time to make a few structural and organizational changes. As we progress further along the development of our cache system, we will interact with our App\HybridCache\Manager implementation from many diverse places within the codebase. Because the cache's current state is critical, regardless of which part of the request/response lifecycle we are in, we will register our Manager class as a singleton within Laravel's service container.

When requesting a dependency from the service container registered as a singleton, we will receive the same instance back each time. Utilizing this design pattern will allow each disparate section of our codebase to use the same Manager instance and, thus, the same cache state without worrying about passing an explicit object instance or state around. We could over-engineer things by having global state management, but at that point, we're introducing needless complexity into an already complicated problem.

However, remember that we are exiting our public/index.php file early. Due to this, we can no longer rely on Laravel's service container to return us an instance of our Manager class. Furthermore, if we create an instance of our cache manager within public/index.php, we must use the same instance elsewhere if we have a cache miss; this might seem like a challenging problem, but we can take advantage of PHP's static properties to help out here.

We will start by updating app/HybridCache/Manager.php to add a new constructor and static property:

In app/HybridCache/Manager.php:

```php
<?php

namespace App\HybridCache;

class Manager
{
    public static ?Manager $instance = null;

    public function __construct()
    {
        self::$instance = $this;
    }

    // ...
}
```

After our changes, the only thing our constructor currently does is set the static $instance property to itself. We can continue explicitly creating our Manager instance within public/index.php without worrying about the service container implementation that happens elsewhere. With this out of the way, we can now update our cache's service provider.

In app/HybridCache/Providers/HybridCacheServiceProvider.php:

```php
<?php

namespace App\HybridCache\Providers;

use App\HybridCache\Manager;
use Illuminate\Support\ServiceProvider;

class HybridCacheServiceProvider extends ServiceProvider
{
    public function register()
    {
        $this->app->singleton(Manager::class, function () {
            // Reuse the instance created in public/index.php, if there is one.
            if (Manager::$instance !== null) {
                return Manager::$instance;
            }

            return new Manager();
        });
    }

    public function boot()
    {
        $cacheStoragePath = storage_path('hybrid-cache');

        if (! file_exists($cacheStoragePath)) {
            mkdir($cacheStoragePath, 0755, true);
        }
    }
}
```

Our singleton callback function first checks if the $instance static property on our Manager class has a value; if it has, we return it instead of creating a new instance. Including this check will allow us to seamlessly utilize any instance created within our public/index.php file without overthinking things.
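If the interplay between the static property and the singleton callback feels abstract, the following standalone script imitates it without Laravel. The `Manager` class here is a stripped-down stand-in, and the `$resolver` closure only mirrors the logic of the callback registered in our service provider.

```php
<?php

// Stand-in for App\HybridCache\Manager, reduced to the static property.
class Manager
{
    public static ?Manager $instance = null;

    public function __construct()
    {
        self::$instance = $this;
    }
}

// Mirrors the logic of the closure our service provider registers.
$resolver = function (): Manager {
    return Manager::$instance ?? new Manager();
};

// public/index.php creates the manager before Laravel boots...
$earlyManager = new Manager();

// ...and the resolver later hands back that exact object.
$resolved = $resolver();

var_dump($resolved === $earlyManager); // bool(true)
```

Had no instance been created early, the resolver would construct one, and the constructor would store it in the static property for everyone else to share.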

As noted earlier, many disparate parts of our site's codebase will interact with our cache manager. We could explicitly add our cache manager as a dependency of each class or section of our codebase later, but since we will be adjusting the behavior of many of Statamic's features, having to redeclare all of their dependencies to inject a cache instance feels obnoxious. To get around this problem, we will leverage Laravel's facade feature.

Facades let us interact with a class implementation through a static method API; even better, facades will resolve class implementations from the application service container.
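To illustrate the idea of forwarding static calls to a resolved instance, here is a deliberately tiny imitation written in plain PHP. It is not how Laravel's Facade class is implemented; `FakeManager` and `MiniFacade` are hypothetical names, and a simple array stands in for the service container.

```php
<?php

// Stand-in for the real cache manager.
class FakeManager
{
    public function canHandle(): bool
    {
        return true;
    }
}

// Static calls are forwarded to an instance looked up by class name.
// Laravel resolves that instance from its service container; here a
// plain array plays that role.
class MiniFacade
{
    /** @var array<string, object> */
    public static array $container = [];

    public static function __callStatic(string $method, array $arguments)
    {
        $instance = self::$container[FakeManager::class];

        return $instance->{$method}(...$arguments);
    }
}

MiniFacade::$container[FakeManager::class] = new FakeManager();

var_dump(MiniFacade::canHandle()); // bool(true)
```

The calling code never needs a reference to the manager object, which is exactly the convenience we are after.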

Many words have been written over the years about how facades are bad and shouldn't be used for one reason or another; if that's your opinion, feel free not to use them - it's just code at the end of the day, and there are more meaningful hills to die on. We will create a custom facade for our cache and utilize it throughout the remainder of the article because it really will simplify some of the things we need to do.

In app/HybridCache/Facades/HybridCache.php:

```php
<?php

namespace App\HybridCache\Facades;

use App\HybridCache\Manager;
use Illuminate\Support\Facades\Facade;

/**
 * @method static bool canHandle()
 * @method static void sendCachedResponse()
 *
 * @see \App\HybridCache\Manager
 */
class HybridCache extends Facade
{
    protected static function getFacadeAccessor()
    {
        // Resolve the same singleton we registered in the service provider.
        return Manager::class;
    }
}
```

Adding our facade isn't beneficial right now since we cannot refactor our public/index.php to use it (Laravel's service container is unavailable to us at that point). We will be making extensive use of it later.

We are now done with the overall organization of our cache system and can start implementing some of the fun stuff.
